Here are my introductory thoughts on neural networks:

- Training nets with data which has a known confidence interval or statistical basis: the known accuracy of the inputs could be used to determine a convergence criterion (i.e. no need to be an expert on Mickey Mouse).

- Generally, I'm thinking about the idea that the higher the input/output ratio, the stronger or more robust the output.

- Explicit, parallel co-update of connections and nodes.

- Connection weights could be visualised by adjusting the connection length, which would give a visual clustering and animate the learning process.

- Adaptive networking in general, for example adding connection "short-cuts" along heavily trafficked routes from input to output.

- The irrelevance of directionality in an explicitly (in the temporal sense) integrated network, which can be thought of more as a "sponge" than a serial machine.

- Because my framework does away with directionality, there are no clear input/output groups for each node. So I'll try keeping two variables in each node: an "internal" value, which is the sum of the connected nodes' external values times their respective weights, and an "external" value, which is presented to the neighbouring nodes. The internal value is passed through a basis function to determine the external value, which adjacent nodes then use to make decisions.

- Input and output nodes need not be ordered, or even adjacent.

- Simultaneous training and guessing: there's no need to separate the two, except that whenever the error can be estimated, corrections are attempted.

- The use of an array of basis functions as a meta-degree of freedom is something I'm willing to try, globally at first and then maybe locally. It would likely disturb any gradient-based optimisation too much, though.

- Lastly, after talking to Karin about my maturity level, I realised that human pride is similar to the "learning speed" (learning rate) parameter I've read about being used to stabilise the learning process. How, um, useful...
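A minimal sketch of the convergence-criterion idea from the first point, assuming the targets carry Gaussian noise with a known standard deviation `noise_std`. Training stops once the RMS error is no larger than the noise itself (plus an arbitrary 25% margin), since fitting any closer would just be learning the noise. A straight-line fit stands in for the net, and `make_noisy_line` and `train_until_noise_floor` are made-up names for illustration.

```python
import math
import random


def make_noisy_line(n, noise_std, slope=2.0, intercept=1.0, seed=0):
    """Synthetic data y = slope*x + intercept + Gaussian noise of known std."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [slope * x + intercept + rng.gauss(0.0, noise_std) for x in xs]
    return xs, ys


def rms_error(a, b, xs, ys):
    """Root-mean-square error of the line y = a*x + b over the data."""
    return math.sqrt(sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


def train_until_noise_floor(xs, ys, noise_std, lr=0.05, margin=0.25, max_epochs=10_000):
    """Fit y ~ a*x + b by per-sample gradient descent on squared error.
    Stops as soon as the RMS error is within `margin` of the known noise
    level. Returns (a, b, epochs_used, final_rms)."""
    a, b = 0.0, 0.0
    target = noise_std * (1.0 + margin)  # the "good enough" threshold
    rms = rms_error(a, b, xs, ys)
    for epoch in range(1, max_epochs + 1):
        for x, y in zip(xs, ys):
            err = (a * x + b) - y
            a -= lr * err * x
            b -= lr * err
        rms = rms_error(a, b, xs, ys)
        if rms <= target:  # as accurate as the data allow, so stop
            return a, b, epoch, rms
    return a, b, max_epochs, rms
```

The point is that the stopping rule comes from the data's known accuracy rather than from a hand-tuned error threshold.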
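The two-value node idea (together with the parallel co-update point) could be sketched roughly like this. `SpongeNet` is a hypothetical class, not an existing library. Connections are stored once and used in both directions, `tanh` is an assumed basis function, and every node's internal value is computed from the previous step's external values before any external value changes, so the update order doesn't matter. Any node can be clamped to act as an input, since inputs and outputs need not be ordered or adjacent.

```python
import math


class SpongeNet:
    """Hypothetical non-directional network with symmetric connections."""

    def __init__(self, n_nodes, basis=math.tanh):
        self.n = n_nodes
        self.basis = basis
        self.weights = {}  # (i, j) with i < j -> weight, shared by both ends
        self.internal = [0.0] * n_nodes
        self.external = [0.0] * n_nodes

    def connect(self, i, j, w):
        self.weights[(min(i, j), max(i, j))] = w

    def step(self, clamped=None):
        """One parallel update. `clamped` maps node index -> fixed value."""
        clamped = clamped or {}
        new_internal = [0.0] * self.n
        for (i, j), w in self.weights.items():
            # each connection feeds both of its nodes: no directionality
            new_internal[i] += w * self.external[j]
            new_internal[j] += w * self.external[i]
        self.internal = new_internal
        self.external = [
            clamped[k] if k in clamped else self.basis(self.internal[k])
            for k in range(self.n)
        ]
```

Clamping node 0 of a three-node chain to 1.0 shows the signal soaking through: node 2 stays at zero for the first two steps and only picks up a value on the third.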
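For the visualisation point, one possible mapping (an assumption on my part, not how SpringThing currently works) is to make a connection's spring rest length shrink as its weight magnitude grows, so Hooke's law pulls strongly connected nodes into visible clusters. `rest_length`, `spring_force`, `base` and `gain` are hypothetical names and tuning knobs.

```python
import math


def rest_length(weight, base=1.0, gain=2.0):
    """Stronger |weight| -> shorter spring, so strong links cluster together."""
    return base / (1.0 + gain * abs(weight))


def spring_force(p_i, p_j, weight, stiffness=1.0):
    """Hooke's-law force on node i from its spring to node j (2-D points).
    Positive stretch pulls i towards j; compression pushes it away."""
    dx, dy = p_j[0] - p_i[0], p_j[1] - p_i[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)  # coincident nodes: direction undefined
    stretch = dist - rest_length(weight)
    scale = stiffness * stretch / dist
    return (scale * dx, scale * dy)
```

Animating the learning process would then just mean recomputing rest lengths from the current weights on every frame.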

One of my first efforts will be to have the mouse produce a yellow dot on an image. That image will be fed to the net, which will estimate the mouse's [X, Y] position, and the actual position will be used to train the network. This will happen within the animation loop of SpringThing, so the (hopefully) studious reaction of the network to the moving mouse will be animated in realtime, either by colouring the connections according to weight or by adjusting connection length.

Check out SpringThing (sample shown at right) to see what I'll be starting with.

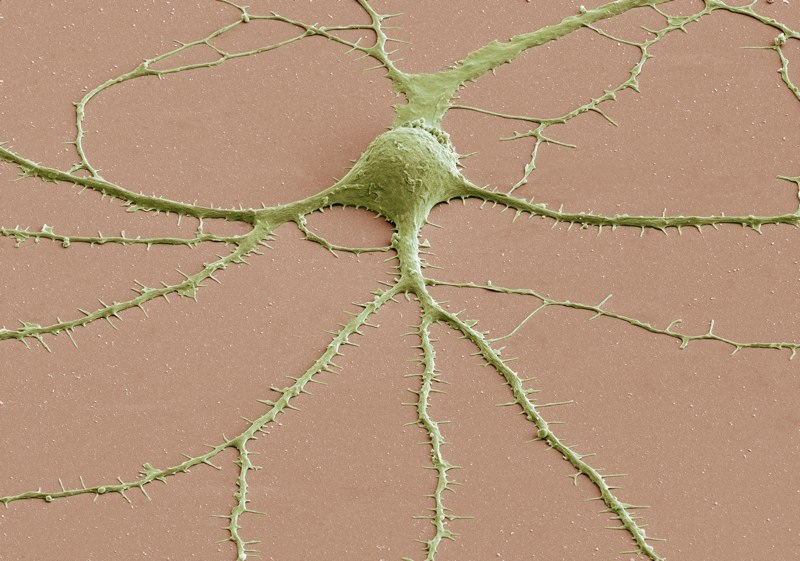
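Here's a rough sketch of the yellow-dot experiment, with big assumptions: an 8x8 single-channel image stands in for the screen (colour is ignored), the dot is a single lit pixel, the "mouse" jumps to random positions rather than moving smoothly, and a single-layer linear estimator stands in for the net. Each frame the net guesses [X, Y] and is then corrected with the true position, so guessing and training happen in the same step. `render_dot`, `PositionNet` and `animation_loop` are hypothetical names; in SpringThing the loop body would sit inside the real animation loop instead.

```python
import random

W, H = 8, 8  # hypothetical tiny screen resolution


def render_dot(x, y):
    """Flattened W*H greyscale image with a single lit pixel at (x, y)."""
    img = [0.0] * (W * H)
    img[y * W + x] = 1.0
    return img


class PositionNet:
    """Single-layer linear estimate of [X, Y] from pixel values."""

    def __init__(self, lr=0.25):
        self.lr = lr
        self.w = [[0.0] * (W * H) for _ in range(2)]  # one weight row per output
        self.b = [0.0, 0.0]

    def guess(self, img):
        return [sum(wi * p for wi, p in zip(row, img)) + bias
                for row, bias in zip(self.w, self.b)]

    def correct(self, img, target):
        """Guess, then take one gradient step on squared error towards the
        true position. Returns the guess made before the correction."""
        est = self.guess(img)
        for k in range(2):
            err = est[k] - target[k]
            self.b[k] -= self.lr * err
            row = self.w[k]
            for i, p in enumerate(img):
                if p:  # zero pixels have zero gradient, so skip them
                    row[i] -= self.lr * err * p
        return est


def animation_loop(net, frames, seed=0):
    """Stand-in for the animation loop: each frame the simulated mouse jumps
    somewhere, the net guesses, then learns from the true position."""
    rng = random.Random(seed)
    errors = []
    for _ in range(frames):
        x, y = rng.randrange(W), rng.randrange(H)
        est = net.correct(render_dot(x, y), (x, y))
        errors.append(abs(est[0] - x) + abs(est[1] - y))
    return errors
```

With one lit pixel, each pixel's weight effectively becomes a lookup of its own coordinate, so the per-frame error should shrink towards zero once every position has been visited a few times. A blurred dot on a larger image would be a much harder test.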

Here's a great recent interview with a guy doing hardware studies:

http://www.nanotech-now.com/news.cgi?story_id=37079

There are a lot of really great-looking packages that I'm avoiding for now. I plan to get some training and optimisation ideas from them, though, as that seems to be the most difficult aspect of this project.

http://www.ire.pw.edu.pl/~rsulej/NetMaker/
